ScalePool: Hybrid XLink-CXL Fabric for Composable Resource Disaggregation in Unified Scale-up Domains

Hyein Woo, Miryeong Kwon, Jiseon Kim, Eunjee Na, Hanjin Choi, Seonghyeon Jang, Myoungsoo Jung

DIMES

2025

Research Areas

Abstract

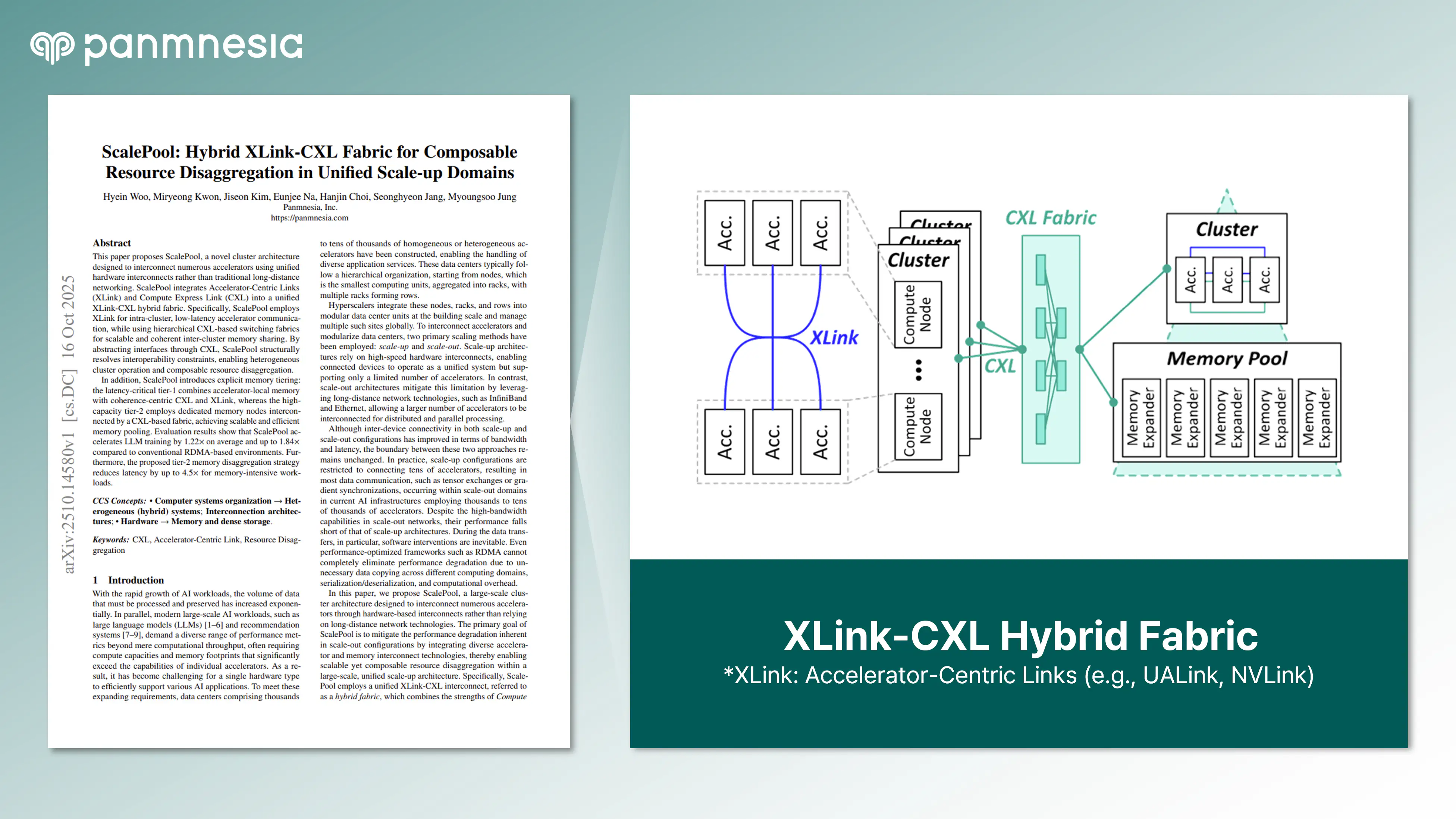

This paper proposes ScalePool, a novel cluster architecture designed to interconnect numerous accelerators using unified hardware interconnects rather than traditional long-distance networking. ScalePool integrates Accelerator-Centric Links (XLink) and Compute Express Link (CXL) into a unified XLink-CXL hybrid fabric. Specifically, ScalePool employs XLink for intra-cluster, low-latency accelerator communication, while using hierarchical CXL-based switching fabrics for scalable and coherent inter-cluster memory sharing. By abstracting interfaces through CXL, ScalePool structurally resolves interoperability constraints, enabling heterogeneous cluster operation and composable resource disaggregation. In addition, ScalePool introduces explicit memory tiering: the latency-critical tier-1 combines accelerator-local memory with coherence-centric CXL and XLink, whereas the highcapacity tier-2 employs dedicated memory nodes interconnected by a CXL-based fabric, achieving scalable and efficient memory pooling. Evaluation results show that ScalePool accelerates LLM training by 1.22x on average and up to 1.84x compared to conventional RDMA-based environments. Furthermore, the proposed tier-2 memory disaggregation strategy reduces latency by up to 4.5x for memory-intensive workloads.