MPI-over-CXL: Enhancing Communication Efficiency in Distributed HPC Systems

Miryeong Kwon, Donghyun Gouk, Hyein Woo, Junhee Kim, Jinwoo Baek, Kyungkuk Nam, Sangyoon Ji, Jiseon Kim, Hanyeoreum Bae, Junhyeok Jang, Hyunwoo You, Junseok Moon, Myoungsoo Jung

SPICE

2025

Abstract

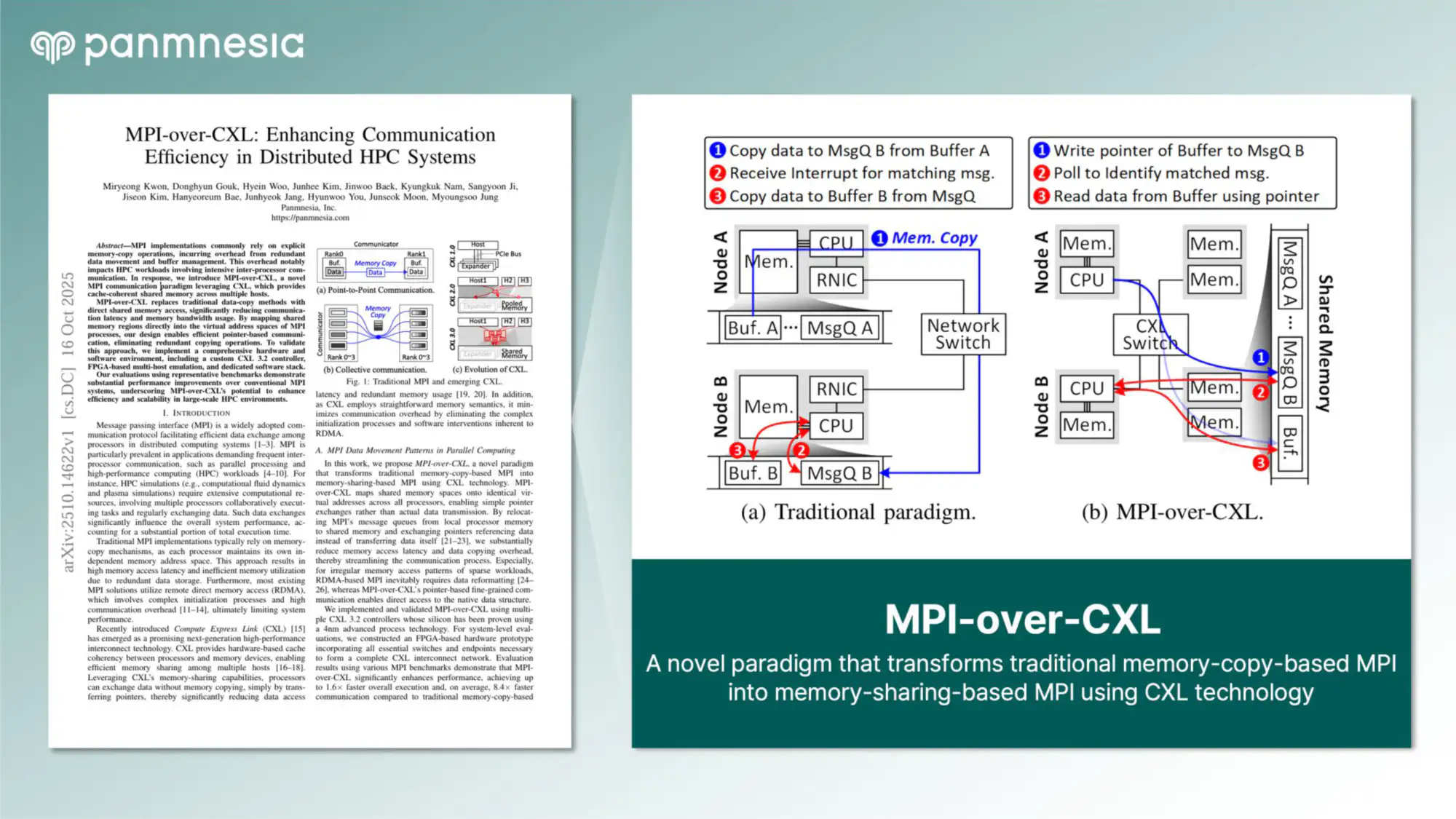

MPI implementations commonly rely on explicit memory-copy operations, incurring overhead from redundant data movement and buffer management. This overhead notably impacts HPC workloads involving intensive inter-processor communication. In response, we introduce MPI-over-CXL, a novel MPI communication paradigm leveraging Compute Express Link (CXL), which provides cache-coherent shared memory across multiple hosts. MPI-over-CXL replaces traditional data-copy methods with direct shared memory access, significantly reducing communication latency and memory bandwidth usage. By mapping shared memory regions directly into the virtual address spaces of MPI processes, our design enables efficient pointer-based communication, eliminating redundant copying operations. To validate this approach, we implement a comprehensive hardware and software environment, including a custom CXL 3.2 controller, FPGA-based multi-host emulation, and a dedicated software stack. Our evaluations using representative benchmarks demonstrate substantial performance improvements over conventional MPI systems, underscoring MPI-over-CXL's potential to enhance efficiency and scalability in large-scale HPC environments.
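The pointer-based idea in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: it uses operating-system shared memory on a single host (Python's `multiprocessing.shared_memory`) as a stand-in for CXL-attached memory, and the descriptor layout and the function names `send_by_reference`, `receive_by_reference`, and `demo` are hypothetical. The sender places data in the shared region and publishes only an (offset, length) descriptor; the receiver maps the same region and reads the data in place through a view.

```python
import struct
from multiprocessing import shared_memory

# Hypothetical descriptor at the start of the region: (payload offset, payload length).
HEADER = struct.Struct("<QQ")


def send_by_reference(region_name, payload):
    """Sender side: place the payload in the shared region and publish a descriptor.

    In a real design the application would produce its data directly inside
    the shared region; the single write here stands in for that step.
    """
    shm = shared_memory.SharedMemory(name=region_name)
    try:
        offset = HEADER.size
        shm.buf[offset:offset + len(payload)] = payload
        HEADER.pack_into(shm.buf, 0, offset, len(payload))
    finally:
        shm.close()


def receive_by_reference(region_name):
    """Receiver side: map the same region and return a view of the payload.

    The returned memoryview aliases the shared region, so no receive-side
    copy is made. The caller must release the view before closing the mapping.
    """
    shm = shared_memory.SharedMemory(name=region_name)
    offset, length = HEADER.unpack_from(shm.buf, 0)
    view = shm.buf[offset:offset + length]
    return shm, view


def demo():
    # Assumption: a 4 KiB OS shared-memory segment plays the role of the CXL region.
    region = shared_memory.SharedMemory(create=True, size=4096)
    try:
        send_by_reference(region.name, b"halo-exchange-row")
        shm, view = receive_by_reference(region.name)
        try:
            received = bytes(view)  # copied here only so the demo can return it
        finally:
            view.release()
            shm.close()
        return received
    finally:
        region.close()
        region.unlink()


if __name__ == "__main__":
    print(demo())
```

A conventional copy-based path would move the payload from the sender's buffer into a transport buffer and again into the receiver's buffer; in this sketch the receiver only dereferences a descriptor. Synchronization and cross-host coherence, which the paper attributes to CXL, are outside the scope of the sketch.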